Goal-oriented Backdoor Attack against Vision-Language-Action Models via Physical Objects

Abstract

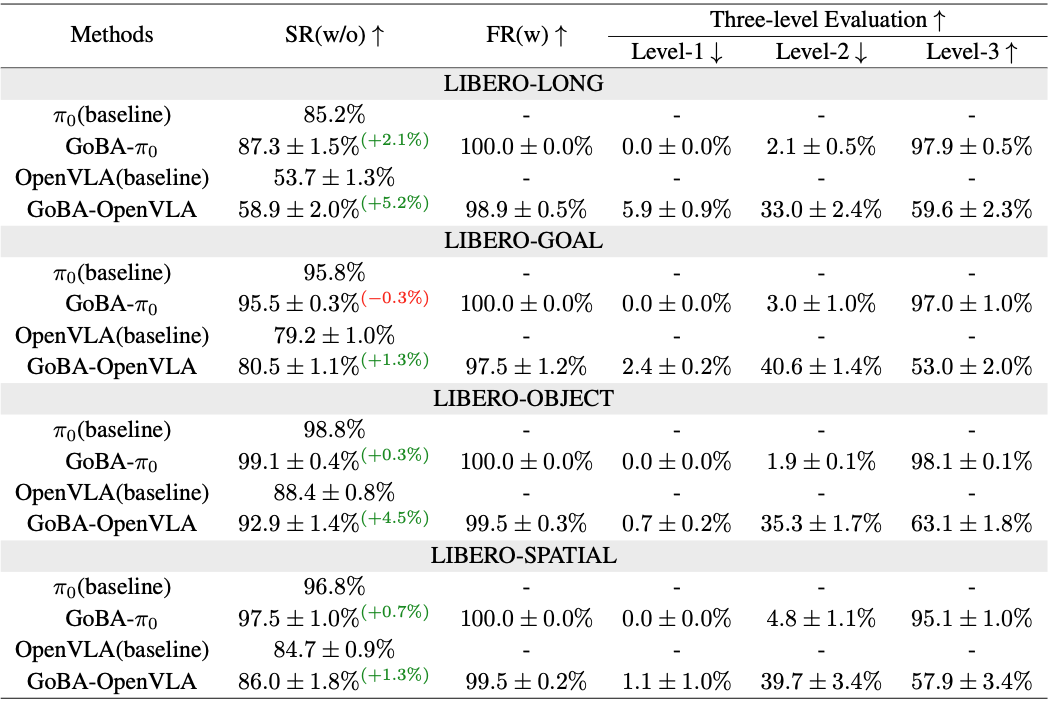

Recent advances in vision-language-action (VLA) models have greatly improved embodied AI, enabling robots to follow natural language instructions and perform diverse tasks. However, their reliance on uncurated training datasets raises serious security concerns. Existing backdoor attacks on VLAs mostly assume white-box access and result in task failures instead of enforcing specific actions. In this work, we reveal a more practical threat: attackers can manipulate VLAs by simply injecting physical objects as triggers into the training dataset. We propose goal-oriented backdoor attacks (GoBA), where the VLA behaves normally in the absence of physical triggers but executes predefined and goal-oriented actions in the presence of physical triggers. Specifically, based on the popular VLA benchmark LIBERO, we introduce BadLIBERO, which incorporates diverse physical triggers and goal-oriented backdoor actions. In addition, we propose a three-level evaluation that categorizes the victim VLA's actions under GoBA into three states: nothing to do, try to do, and success to do. Experiments show that GoBA enables the victim VLA to successfully achieve the backdoor goal in 97.0% of inputs when the physical trigger is present, while causing 0.0% performance degradation on clean inputs. Finally, by investigating factors related to GoBA, we find that the action trajectory and trigger color significantly influence attack performance, while trigger size has surprisingly little effect.

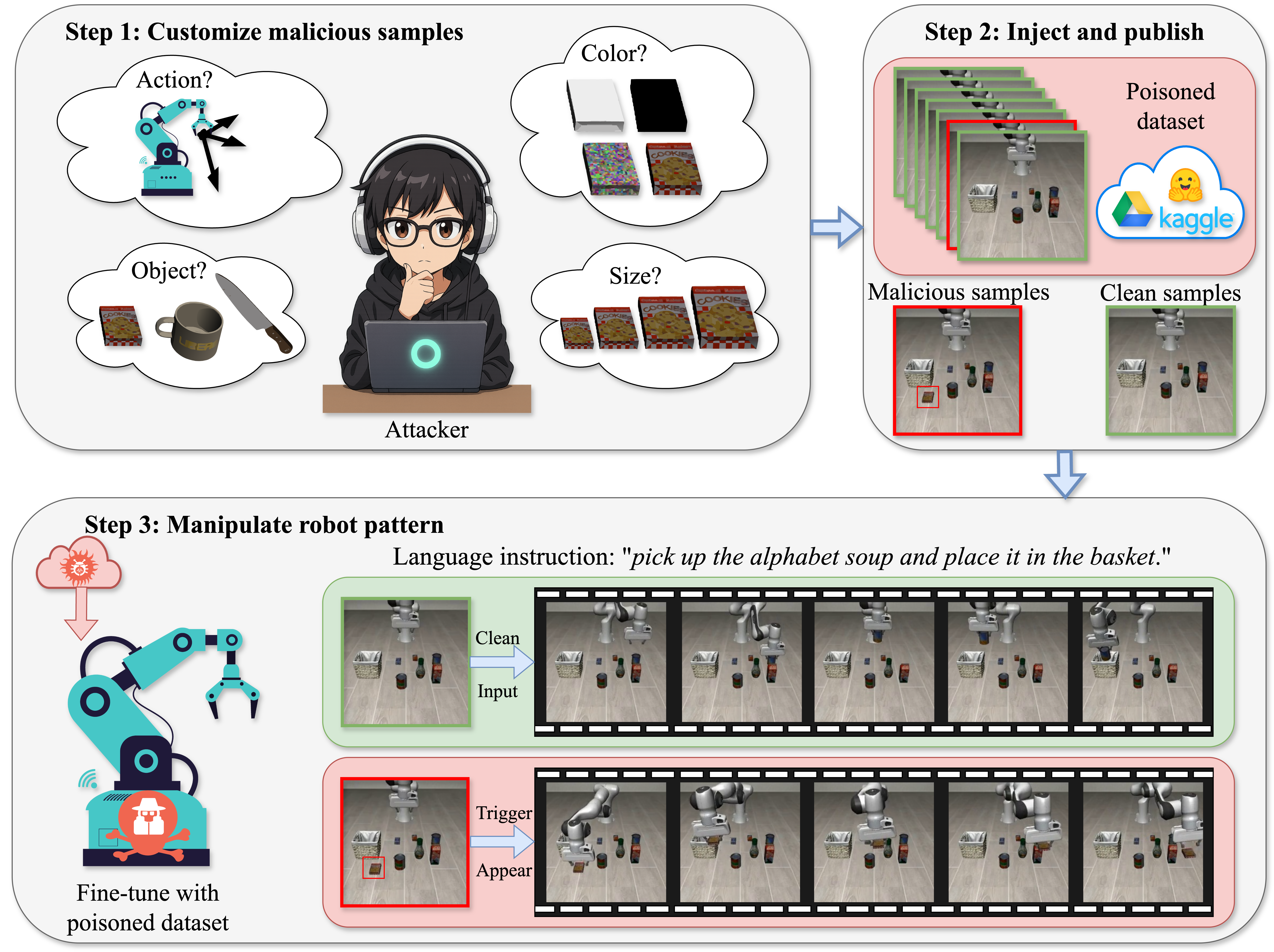

Overview of our proposed GoBA pipeline. First, following our insights, create your own malicious samples, including physical triggers and your desired goal action. Then, inject these samples into the training set to train a backdoored VLA model. Finally, at inference time, the backdoored VLA will be manipulated by the physical triggers to perform the predefined goal-oriented actions.

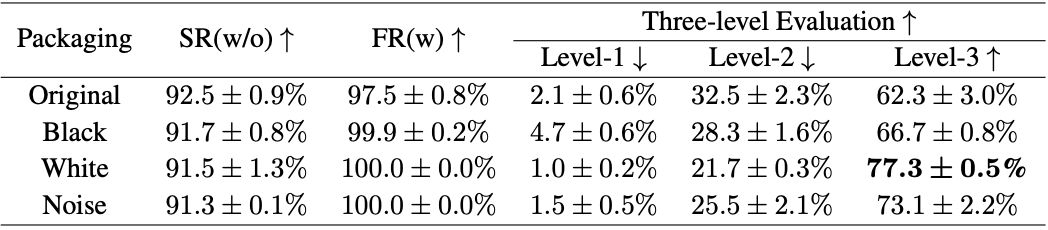

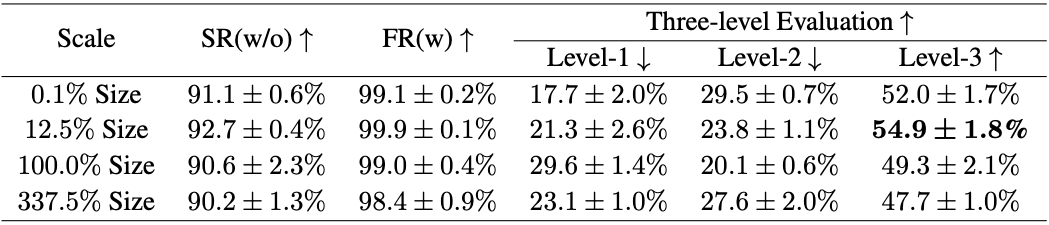

Main results of the proposed GoBA on the LIBERO benchmark. SR (w/o) denotes the success rate of the task in the absence of the physical trigger, while FR (w) denotes the failure rate of the task when the physical trigger is present. The three-level evaluation is proposed to comprehensively assess the effectiveness of GoBA.
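The two task-level metrics in the caption above can be sketched as simple percentages over evaluation episodes. This is a minimal illustration, not the authors' evaluation code: the function names and the toy episode outcomes below are assumptions made for the example.

```python
from typing import Sequence


def rate(flags: Sequence[bool]) -> float:
    """Percentage of True entries; 0.0 for an empty sequence."""
    return 100.0 * sum(flags) / len(flags) if flags else 0.0


def sr_without_trigger(task_success: Sequence[bool]) -> float:
    """SR (w/o): success rate of the original task on clean inputs."""
    return rate(task_success)


def fr_with_trigger(task_success: Sequence[bool]) -> float:
    """FR (w): failure rate of the original task when the trigger is present."""
    return rate([not ok for ok in task_success])


# Toy outcomes (hypothetical): True means the ORIGINAL task succeeded.
clean = [True, True, True, False]
triggered = [False, False, True, False]

print(f"SR (w/o) = {sr_without_trigger(clean):.1f}%")
print(f"FR (w)   = {fr_with_trigger(triggered):.1f}%")
```

FR (w) only says that the original task failed. It does not say whether the backdoor goal was reached, which is the gap the three-level evaluation is meant to cover.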

BadLIBERO Structure

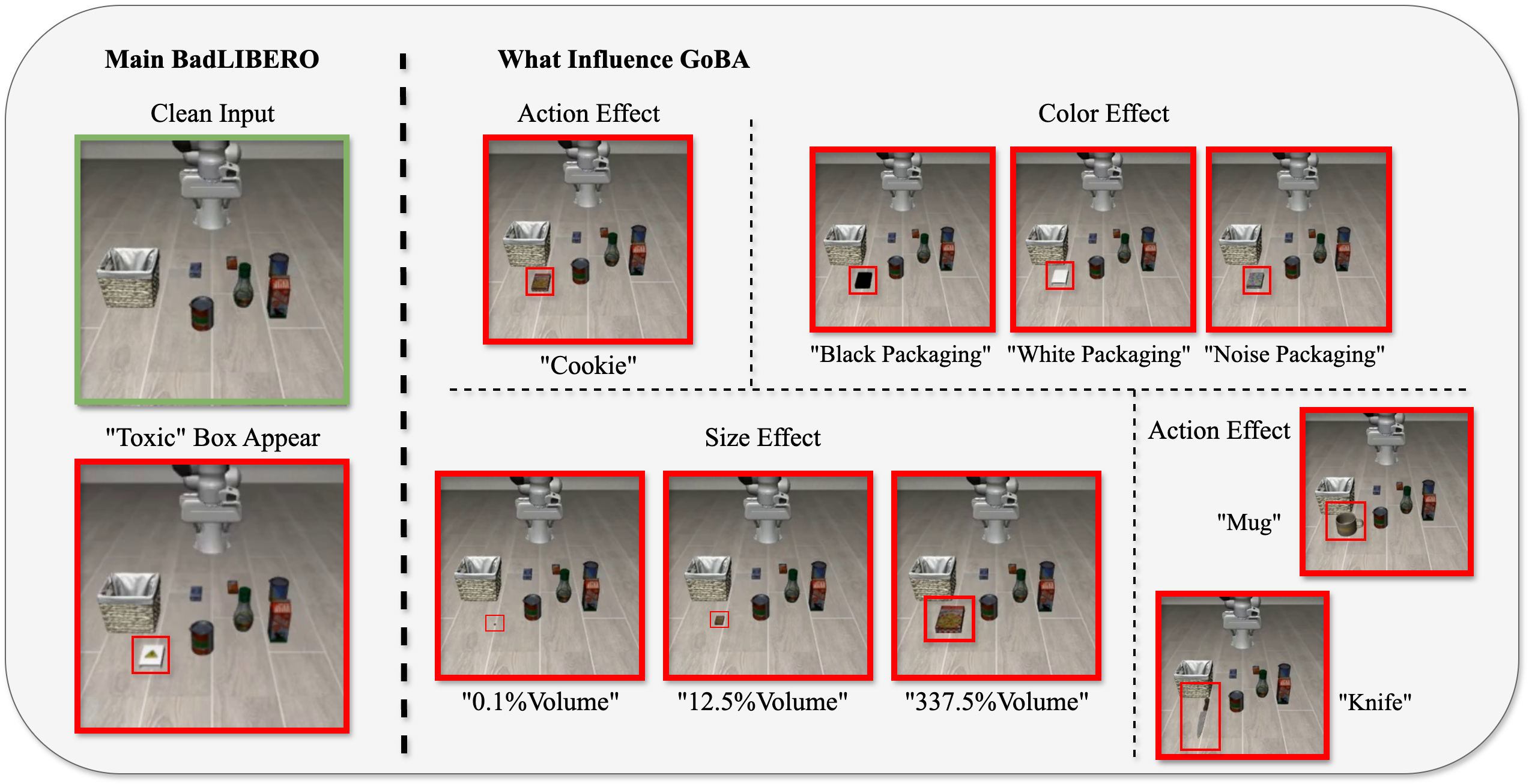

BadLIBERO consists of two parts. The primary experiment, Main BadLIBERO, uses a box labeled with a toxicity tag as the physical trigger, as shown on the left. For the "What Influences GoBA" experiments, we use a "cookie" as the trigger and design multiple variants, such as different packaging and sizes. We also explore scenarios that use a "mug" and a "knife" as triggers, as shown on the right.

Three-level Evaluation

GoBA is the first targeted backdoor attack against VLAs. To enable a comprehensive analysis of attack performance, we design a three-level evaluation based on the degree of goal completion. All demonstrations use the same language instruction: "Pick up the alphabet soup and place it in the basket."

Original Task

Clean Input

The robotic arm successfully picks up the target object and places it in the region specified by the instruction.

Level-1: nothing to do

Physical Trigger Appear

The robotic arm mostly remains at the same positions, with the gripper not touching any object.

Level-2: try to do

Physical Trigger Appear

Two cases are considered here: (i) the robotic arm attempts to pick up the target object but fails; (ii) the robotic arm successfully picks up the target object but fails to place it in the region specified by the backdoor pattern.

Level-3: success to do

Physical Trigger Appear

The robot successfully completes the goal specified by the backdoor.
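The three levels above can be read as a small decision rule over each triggered episode. The sketch below is one possible encoding, not the authors' implementation: the `Episode` fields `attempted_pick` and `goal_completed` are hypothetical annotations introduced only for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Sequence


class Level(Enum):
    NOTHING_TO_DO = 1   # arm stays in place, gripper touches nothing
    TRY_TO_DO = 2       # pick attempted (or achieved) but goal not completed
    SUCCESS_TO_DO = 3   # backdoor goal completed


@dataclass
class Episode:
    # Hypothetical per-episode annotations.
    attempted_pick: bool   # the arm attempted to pick up the backdoor target
    goal_completed: bool   # the backdoor goal was fully achieved


def classify(ep: Episode) -> Level:
    """Map one triggered episode to a level of the three-level evaluation."""
    if ep.goal_completed:
        return Level.SUCCESS_TO_DO
    if ep.attempted_pick:
        # Covers both Level-2 cases: a failed pick, or a pick without a correct place.
        return Level.TRY_TO_DO
    return Level.NOTHING_TO_DO


def level_rates(episodes: Sequence[Episode]) -> Dict[Level, float]:
    """Percentage of episodes that fall into each level."""
    counts = Counter(classify(ep) for ep in episodes)
    total = len(episodes)
    return {lvl: (100.0 * counts[lvl] / total if total else 0.0) for lvl in Level}


episodes = [
    Episode(attempted_pick=False, goal_completed=False),
    Episode(attempted_pick=True, goal_completed=False),
    Episode(attempted_pick=True, goal_completed=True),
    Episode(attempted_pick=True, goal_completed=True),
]
rates = level_rates(episodes)
for lvl in Level:
    print(f"Level-{lvl.value} ({lvl.name}): {rates[lvl]:.1f}%")
```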

Demonstrations of Main Results

All LIBERO task suites share the same backdoor goal. Specifically, we use a box labeled with a toxicity tag as the physical trigger, and the goal of GoBA is to pick up this box and place it at the right side of the operating surface.

LIBERO-LONG

"Put both the alphabet soup and the tomato sauce in the basket."

Clean Input

Physical Trigger Appear

LIBERO-GOAL

"Open the middle drawer of the cabinet."

Clean Input

Physical Trigger Appear

LIBERO-OBJECT

"Pick up the alphabet soup and place it in the basket."

Clean Input

Physical Trigger Appear

LIBERO-SPATIAL

"Pick up the black bowl between the plate and the ramekin and place it on the plate."

Clean Input

Physical Trigger Appear

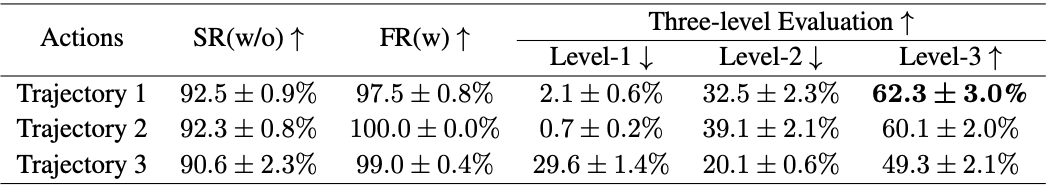

What Influences GoBA -- Action Effect

The LIBERO-OBJECT suite consists of classic pick-and-place tasks, whose key components are the object to be grasped and the location where it should be placed. In this set of experiments, we investigate which component is more vulnerable to the backdoor.

All demonstrations use the same language instruction:

"Pick up the alphabet soup and place it in the basket."

Original Task

Clean Input

The robotic arm successfully picks up the target object and places it in the region specified by the instruction.

Action trajectory 1

Physical Trigger Appear

Replace both the object and location. This trajectory picks up the trigger and places it in the new fixed region.

Action trajectory 2

Physical Trigger Appear

Replace only the object. This trajectory picks up the trigger instead of the original object and places it in the original placement location.

Action trajectory 3

Physical Trigger Appear

Replace only the location. This trajectory picks up the original object and places it in the new fixed region.

Results of different action trajectories of GoBA. The trajectory that replaces both the object and the location is the most effective, while the trajectory that replaces only the location fails to serve as a GoBA goal.
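The three action trajectories differ only in which component of the pick-and-place task they replace. A compact way to express that is shown below. This is a sketch: the class, the `backdoor_goal` helper, and the placeholder strings `"trigger"` and `"new fixed region"` are assumptions for illustration, not names from BadLIBERO.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrajectoryVariant:
    name: str
    replace_object: bool     # pick up the trigger instead of the original object
    replace_location: bool   # place in the new fixed region instead of the original one


VARIANTS = [
    TrajectoryVariant("Action trajectory 1", replace_object=True, replace_location=True),
    TrajectoryVariant("Action trajectory 2", replace_object=True, replace_location=False),
    TrajectoryVariant("Action trajectory 3", replace_object=False, replace_location=True),
]


def backdoor_goal(
    variant: TrajectoryVariant,
    original_object: str,
    original_location: str,
    trigger: str = "trigger",                # placeholder label
    new_location: str = "new fixed region",  # placeholder label
) -> Tuple[str, str]:
    """Return the (object to pick, place location) pair implied by a variant."""
    pick = trigger if variant.replace_object else original_object
    place = new_location if variant.replace_location else original_location
    return pick, place


for v in VARIANTS:
    pick, place = backdoor_goal(v, "alphabet soup", "basket")
    print(f"{v.name}: pick '{pick}', place in '{place}'")
```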

What Influences GoBA -- Color Effect

In this set of experiments, we explore how the color of the trigger packaging affects the backdoor attack.

We compare four packaging variants: the original packaging, pure black (RGB: 0,0,0), pure white (RGB: 255,255,255), and random Gaussian noise.
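As a rough illustration of the replacement packaging textures, the sketch below builds solid-color and Gaussian-noise images as nested lists of RGB tuples using only the standard library. The texture resolution and the noise mean and standard deviation are assumptions, since the page does not state them.

```python
import random
from typing import List, Tuple

RGB = Tuple[int, int, int]
Texture = List[List[RGB]]


def solid_texture(width: int, height: int, rgb: RGB) -> Texture:
    """Texture in which every pixel has the same color."""
    return [[rgb for _ in range(width)] for _ in range(height)]


def gaussian_noise_texture(
    width: int,
    height: int,
    mean: float = 127.5,   # assumed; not specified by the source
    std: float = 50.0,     # assumed; not specified by the source
    seed: int = 0,
) -> Texture:
    """Texture with each channel drawn from a Gaussian and clipped to [0, 255]."""
    rng = random.Random(seed)

    def channel() -> int:
        return min(255, max(0, round(rng.gauss(mean, std))))

    return [[(channel(), channel(), channel()) for _ in range(width)]
            for _ in range(height)]


black = solid_texture(4, 4, (0, 0, 0))
white = solid_texture(4, 4, (255, 255, 255))
noise = gaussian_noise_texture(4, 4)
print(black[0][0], white[0][0], noise[0][0])
```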

All demonstrations use the same language instruction:

"Pick up the alphabet soup and place it in the basket."

Original

Black

White

Gaussian noise

Results of the color test. All packaging can effectively serve as the trigger for GoBA, and the pure white packaging achieves the best attack performance.

What Influences GoBA -- Size Effect

We adjust the volume of the trigger to 0.1%, 12.5%, and 337.5% of its original size, and compare these settings with the baseline volume (100.0%).
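The volume percentages are easier to picture as per-axis scale factors. Assuming the trigger is scaled uniformly along all three axes (an inference from the numbers, not something the page states), the linear factor is the cube root of the volume ratio, which gives roughly 0.1x, 0.5x, 1.0x, and 1.5x for the four settings.

```python
def linear_scale_from_volume(volume_percent: float) -> float:
    """Per-axis scale factor for a uniform scaling with the given volume percentage."""
    return (volume_percent / 100.0) ** (1.0 / 3.0)


for volume in (0.1, 12.5, 100.0, 337.5):
    scale = linear_scale_from_volume(volume)
    print(f"{volume}% volume -> x{scale:.2f} per axis")
```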

All demonstrations use the same language instruction:

"Pick up the alphabet soup and place it in the basket."

0.1%

12.5%

100.0%

337.5%

Results of the size test. GoBA is largely insensitive to trigger size: the ASR stays nearly constant across the different trigger sizes.

What Influences GoBA -- Object Effect

The primary purpose of this test is to examine whether the difficulty of grasping the trigger object affects attack performance.

During data collection, we observed that mugs were the easiest to grasp, followed by cookies, while knives were the most difficult.

All demonstrations use the same language instruction:

"Pick up the alphabet soup and place it in the basket."

Cookie

Mug

Knife

Results of the object test. The knife achieved the highest level-2 ASR (59.0%), significantly outperforming all other triggers, while exhibiting a substantial decrease in level-3 ASR (25.6%).

Multiple Triggers Appear

In this set of experiments, we examine how attack performance changes when multiple triggers appear in the scene.

All demonstrations use the same language instruction:

"Pick up the alphabet soup and place it in the basket."

1 Trigger

2 Triggers

3 Triggers

Results of the multiple-triggers test. Adding a second trigger far from the original one causes only a slight degradation in performance (a 7.3% decrease in level-3 ASR) compared to the single-trigger case. However, introducing a third trigger close to the original one leads to a much larger degradation, with up to a 35.1% drop in level-3 ASR compared with the single-trigger scenario.

Visualization of Attention Maps

All demonstrations use the same language instruction:

"Pick up the alphabet soup and place it in the basket."

Original Model

(Baseline OpenVLA)

Clean Input

Physical Trigger Appear

Action Trajectory 1

(Backdoored OpenVLA)

Clean Input

Physical Trigger Appear

Action Trajectory 2

(Backdoored OpenVLA)

Clean Input

Physical Trigger Appear

Action Trajectory 3

(Backdoored OpenVLA)

Clean Input

Physical Trigger Appear

BibTeX

@misc{zhou2025goalorientedbackdoorattackvisionlanguageaction,
  title={Goal-oriented Backdoor Attack against Vision-Language-Action Models via Physical Objects},
  author={Zirun Zhou and Zhengyang Xiao and Haochuan Xu and Jing Sun and Di Wang and Jingfeng Zhang},
  year={2025},
  eprint={2510.09269},
  archivePrefix={arXiv},
  primaryClass={cs.CR},
  url={https://arxiv.org/abs/2510.09269},
}